SAP has been contributing to CF Abacus since late 2015.

In the beginning Abacus was an IBM-only project. We saw potential in it, as SAP needed a solution to meter CF usage, so we decided to check it out. Georgi Sabev and I did the initial investigation and found it could fit our needs.

Once we decided to use it in production, a 10-person team worked on Abacus to make it part of the SAP metering, analytics and billing pipeline.

Along the way we found several significant architectural issues that made us start planning version 2 of Abacus.

MongoDB & Deployment

To enable the use of Abacus at SAP we had to solve two problems: how to use CouchDB and how to install the plethora of applications.

We decided to support the more popular MongoDB and to create Concourse deployment pipelines.

DB size

However, we quickly discovered that by default Abacus is configured to log each change, much like financial systems do.

For every input document sent to Abacus, it stores several documents in its internal DBs:

- original document

- normalized document

- collector document (from previous step)

- doc with applied metering function

- metered doc (from previous step)

- doc with applied accumulator fn

- accumulated doc (from previous step)

- doc with applied aggregated fn

It turned out that we do map-reduce with an input:output ratio of 1:10. For a 1 KB doc we have 10 KB of data in the Abacus DBs, as we keep every iteration.

As we used Abacus only for metering, to limit the impact we:

- configured SAMPLING

- implemented app-level sharding

- added a housekeeper app that deletes data older than 3 months

- dropped pricing and rating functions and data from documents

Sampling is a way to keep only one document from the reduce stages for a certain period of time. This increases the risk of losing data in case of an app or DB crash, but reduces the data we keep.
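The effect of sampling can be illustrated with a minimal sketch. It is written in Python for brevity (Abacus itself is Node.js), and the function name `sample`, the `window_ms` parameter and the document fields are assumptions made for illustration, not the actual Abacus configuration or schema. Within each time window only the latest document per key survives:

```python
from typing import Dict, List, Tuple


def sample(docs: List[dict], window_ms: int) -> List[dict]:
    """Keep only the latest document per (key, time window).

    Each doc is assumed to carry a 'key' (e.g. an organization id) and a
    'ts' timestamp in milliseconds; both field names are hypothetical.
    """
    latest: Dict[Tuple[str, int], dict] = {}
    for doc in sorted(docs, key=lambda d: d["ts"]):
        bucket = doc["ts"] // window_ms  # index of the window the doc falls into
        latest[(doc["key"], bucket)] = doc  # later docs overwrite earlier ones
    return list(latest.values())


docs = [
    {"key": "org-1", "ts": 1_000, "total": 1},
    {"key": "org-1", "ts": 30_000, "total": 2},
    {"key": "org-1", "ts": 59_000, "total": 3},
    {"key": "org-1", "ts": 61_000, "total": 4},
]
kept = sample(docs, window_ms=60_000)
print([d["total"] for d in kept])  # [3, 4]
```

With a one-minute window the four documents collapse to two; the first two intermediate states are simply not kept.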

Back then, sharding was not available for our MongoDB service instance, so the maximum amount of data we could store was 800 GB. With the 1:10 ratio, this meant that an input of 80 GB could get us in trouble. That's why we implemented an additional layer that allowed us to round-robin the Abacus data across normal MongoDB instances, until we got a MongoDB that supports data in the TB range.

A housekeeper was also needed to comply with legal requirements, so we went ahead and implemented it, which also reduced the size of the DB.

One of the last things we did was to drop the redundant data. We left it for last because it also changed the reporting API output, so we considered it a breaking change.
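A rough sketch of both the arithmetic and the partitioning idea follows, in Python for brevity. The 1:10 ratio and the 800 GB limit come from the text above; partitioning by calendar month and the instance names are assumptions for illustration, since the actual partitioning key is not described here.

```python
from typing import List

AMPLIFICATION = 10       # stored bytes per input byte (the 1:10 ratio)
INSTANCE_LIMIT_GB = 800  # maximum data per MongoDB service instance


def max_input_gb(instances: int) -> float:
    """Input volume that fills the given number of instances."""
    return instances * INSTANCE_LIMIT_GB / AMPLIFICATION


def pick_instance(year: int, month: int, instances: List[str]) -> str:
    """Round-robin a time partition (assumed: a calendar month) over instances."""
    partition = year * 12 + (month - 1)  # consecutive months get consecutive numbers
    return instances[partition % len(instances)]


instances = ["mongo-0", "mongo-1", "mongo-2"]  # hypothetical instance names
print(max_input_gb(1))               # 80.0
print(max_input_gb(len(instances)))  # 240.0
print([pick_instance(2018, m, instances) for m in (1, 2, 3, 4)])
# ['mongo-0', 'mongo-1', 'mongo-2', 'mongo-0']
```

Each extra instance raises the tolerable input by another 80 GB, but nothing in such a scheme balances the data volume between instances.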
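The core of such a housekeeper is a retention cutoff. The sketch below (Python for brevity, not the actual Abacus housekeeper) assumes each document carries a `processed` timestamp, which is a hypothetical field name, and selects everything older than 3 calendar months:

```python
from datetime import datetime, timezone
from typing import List


def retention_cutoff(now: datetime, months: int = 3) -> datetime:
    """Return a point in time `months` calendar months before `now`."""
    total = now.year * 12 + (now.month - 1) - months
    year, month = divmod(total, 12)
    # Cap the day at 28 so the result is a valid date in every month.
    day = min(now.day, 28)
    return now.replace(year=year, month=month + 1, day=day)


def expired(docs: List[dict], now: datetime) -> List[dict]:
    """Documents whose 'processed' time is older than the cutoff."""
    cutoff = retention_cutoff(now)
    return [d for d in docs if d["processed"] < cutoff]


now = datetime(2019, 5, 31, tzinfo=timezone.utc)
docs = [
    {"id": "old", "processed": datetime(2019, 1, 15, tzinfo=timezone.utc)},
    {"id": "new", "processed": datetime(2019, 4, 1, tzinfo=timezone.utc)},
]
print(retention_cutoff(now).date())        # 2019-02-28
print([d["id"] for d in expired(docs, now)])  # ['old']
```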

Scalability

Once we were confident Abacus worked fine, we concentrated on providing a smooth update experience.

The microservice stages of Abacus are tightly bound together and keep state. This means they can’t be scaled independently or updated via a normal rolling or blue-green deployment.

To solve this problem we decided to redesign the map stages of the Abacus pipeline (the collector and meter applications) and turn them into real, stateless microservices. To externalize the state we put RabbitMQ between them.

This buffer allowed us to handle spikes in input documents that would otherwise overload the Abacus pipeline, by distributing the load over time.

The max speed we got in real life was ~10 documents per second with ~100 applications. Again a ratio of 1:10. As the ratio hints, this is due to the DB design, which requires per-organization locking in the reduce stages and causes DB contention as we write to the same document.
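How a buffer spreads a spike over time can be shown with a small simulation. This is a sketch in Python where an in-memory queue stands in for RabbitMQ; the arrival counts and the consumer capacity are made-up numbers, not Abacus measurements.

```python
from collections import deque
from typing import List


def simulate(arrivals: List[int], capacity_per_tick: int) -> List[int]:
    """Return the queue backlog after each tick.

    arrivals[i] is the number of documents submitted in tick i; the
    consumer processes at most capacity_per_tick documents per tick.
    """
    queue: deque = deque()
    backlog = []
    for tick, count in enumerate(arrivals):
        queue.extend((tick, n) for n in range(count))  # producer side
        for _ in range(min(capacity_per_tick, len(queue))):
            queue.popleft()  # consumer side, oldest document first
        backlog.append(len(queue))
    return backlog


# A spike of 50 documents in the second tick; the consumer handles 10 per tick.
print(simulate([5, 50, 5, 0, 0, 0, 0], capacity_per_tick=10))
# [0, 40, 35, 25, 15, 5, 0]
```

The consumer never sees more than 10 documents per tick; the spike turns into a backlog that drains over the following ticks instead of overloading the downstream stages.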

Zero-downtime update

Scalability and updates are tightly coupled. Once we added the buffer in front of the reduce stages, we could separate Abacus into 2 types of components:

- user-facing: collector, RabbitMQ, meter, plugins, reporting

- internal: accumulator, aggregator

The internal ones that were problematic before are now isolated by the buffer, so we could update them with downtime, as users will not notice their absence.

Custom functions

At this point we had a working system and could add more users.

Abacus allows great flexibility in defining metering, accumulation and aggregation functions, so we thought this was going to be easy for our users.

But the devil was in the details. This was not easy; as it turned out, it was a terrible experience for them. An Abacus user has to:

- have basic JavaScript knowledge

- understand what all of the functions do

- understand how data flows between functions

- understand time-window concepts

- try the function on a real system

However, there were more aspects to JavaScript functions evaluated at runtime:

- Node.js was never meant to support this

- Slow execution (close to a second per call)

- No clean security model

An example of perfectly valid code in a function is the allocation of a big array. This passes all the security checks we added, but causes the Abacus application to crash repeatedly, as we retry the calculation with an exponential backoff.
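The reason retries do not help here can be sketched as follows (Python for brevity; the names are hypothetical and this is not the Abacus retry code). A failure that is deterministic fails again on every attempt, no matter how long the backoff is. To keep the sketch safe to run, the huge allocation is replaced by a raised `MemoryError`, whereas in the real system the whole application crashes.

```python
from typing import Callable, List


def backoff_delays(base_s: float, retries: int) -> List[float]:
    """Delays before each retry: base, 2*base, 4*base, ..."""
    return [base_s * 2 ** attempt for attempt in range(retries)]


def run_with_retries(fn: Callable[[], int], retries: int) -> int:
    """Call fn, retrying on MemoryError; return the number of failed attempts."""
    failures = 0
    for _ in range(retries + 1):
        try:
            fn()
            return failures
        except MemoryError:
            failures += 1  # a real system would wait for the next delay here
    return failures


def greedy_plan_function() -> int:
    # Stand-in for a plan function that allocates a huge array.
    raise MemoryError("allocation too large")


print(backoff_delays(1.0, 4))                     # [1.0, 2.0, 4.0, 8.0]
print(run_with_retries(greedy_plan_function, 4))  # 5
```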

Reporting

The reporting endpoint of Abacus is supposed to return already computed data. With the introduction of time-based functions this contract was broken. We need to calculate the metric at the exact time the report is requested and to call the summarize function on multiple entities in the report.

For an organization with several thousand spaces, each with several thousand apps, this might take up to 5 minutes, while the usual request-response timeout is hard-coded to 2 minutes (AWS, GCP, ...).

Besides that, Node.js apps do not cope well with CPU-intensive work, so we had to interleave these calculations to allow the reporting app to keep responding.

The generated report, on the other hand, can become enormous: 2 GB. To customize and reduce the report, Abacus uses GraphQL. However, the GraphQL schema is applied only after all data is fetched and processed. This is because the DB schema differs from the report schema.
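The interleaving idea can be sketched with Python's `asyncio`, which has a single-threaded event loop comparable to the one in Node.js (where the same idea is typically expressed with `setImmediate`). This is an analogy under stated assumptions, not the Abacus code: the chunk size, the squared-sum stand-in for the summarize function and the `heartbeat` task are all made up for illustration.

```python
import asyncio
from typing import List


async def summarize_all(entities: List[int], chunk_size: int = 100) -> int:
    """CPU-bound work split into chunks, yielding to the event loop between chunks."""
    total = 0
    for start in range(0, len(entities), chunk_size):
        for value in entities[start:start + chunk_size]:
            total += value * value  # stand-in for a summarize function
        await asyncio.sleep(0)  # let other pending tasks (e.g. requests) run
    return total


async def heartbeat(ticks: List[int]) -> None:
    """Stand-in for other requests the server should keep answering."""
    while True:
        ticks.append(1)
        await asyncio.sleep(0)


async def main() -> tuple:
    ticks: List[int] = []
    beat = asyncio.create_task(heartbeat(ticks))
    total = await summarize_all(list(range(1000)))
    beat.cancel()
    return total, len(ticks)


total, served = asyncio.run(main())
print(total, served > 1)  # 332833500 True
```

Without the yield after each chunk, the heartbeat task would not get a turn until the whole calculation had finished.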

Contributions

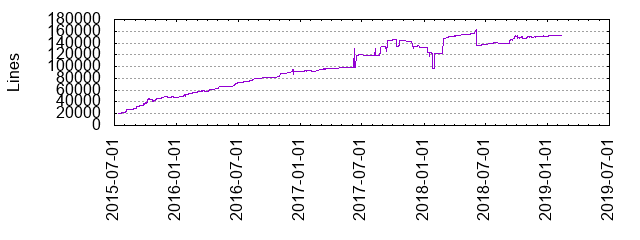

SAP has contributed lots of code trying to stabilize Abacus.

The LOC statistics below show the introduction of the RabbitMQ buffer and the removal of CouchDB support, rating and pricing between 2017 and 2019:

[Chart: Lines of code]

[Chart: Commits]

For most of the issues we had, we ended up blaming several architectural concepts that did not fit our use case and requirements. We managed to fix or work around some of the most pressing ones, such as update and scalability.

Architecture problems

However, these are some of the high-level problems we still face with the current (May 2019) Abacus codebase:

- reduce stages of Abacus are tightly bound together, they keep state, can’t be scaled independently; complex handling of rollbacks in case errors occur

- DoS vulnerability: the API allows metering plans to be defined as Node.js code

- Query API (GraphQL) limited to service level, but does not support service instance level

- Open source community: IBM no longer contributes to Abacus, SAP remains the only contributor

- API tailored to CF (org/space), does not fit the SAP CP domain model (global account/subaccount)

- Components communicate via HTTPS, going through the standard CF access flow (Load Balancer, HA, GoRouter) which is sub-optimal and can lead to excess network traffic

- The MongoDB storage used cannot scale horizontally, leading to manual partitioning with multiple service instances (data is not balanced over MongoDB instances)

- Abacus service discovery model for reduce stages is orthogonal to CF deployment model, resulting in slow updates and development cycles

- Users can send and store arbitrary data in Abacus (with various size and structure), making it hard to commercialize

- Users have a hard time understanding, designing, and getting plan functions to work correctly

- Reports can take forever to construct and can lead to OOM

- If users abuse the metering model, their aggregation documents can surpass the MongoDB limit of 16 MB leading to failures

- accumulator and aggregator apps rely on being singletons. Should there be two instances at any given point (which can happen because of network issues), accumulated data can become incorrect
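The last point can be illustrated with a small, deterministic sketch of a lost update. It is written in Python for brevity and assumes that accumulation is a plain read-modify-write on one document without any conditional write; the `Store` class and field names are hypothetical, and this is only one way the described corruption could arise.

```python
from typing import Dict


class Store:
    """Tiny in-memory stand-in for the DB holding one accumulated document."""

    def __init__(self) -> None:
        self.doc: Dict[str, int] = {"quantity": 0}

    def read(self) -> Dict[str, int]:
        return dict(self.doc)  # each instance works on its own copy

    def write(self, doc: Dict[str, int]) -> None:
        self.doc = doc


def accumulate(snapshot: Dict[str, int], usage: int) -> Dict[str, int]:
    return {"quantity": snapshot["quantity"] + usage}


# Single instance: read, accumulate, write, one usage document at a time.
store = Store()
for usage in (5, 7):
    store.write(accumulate(store.read(), usage))
single = store.doc["quantity"]

# Two instances: both read before either one writes.
store = Store()
seen_by_a = store.read()
seen_by_b = store.read()
store.write(accumulate(seen_by_a, 5))
store.write(accumulate(seen_by_b, 7))  # overwrites the result written by A
duplicated = store.doc["quantity"]

print(single, duplicated)  # 12 7
```

With a single instance both usage documents are counted; with two overlapping instances the usage of 5 silently disappears.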

Abacus v2

All of the architectural issues above, together with the lack of other pressing issues, led us to think that we had to start a v2 implementation.

Momchil Atanasov started redesigning Abacus and creating new concepts for this version. Some of them:

- Custom Metering Language (instead of JS functions)

- Hierarchical Accumulation - allows monthly accumulations through daily accumulations

- Usage Sampler - replace time-based functions with usage sampling
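The v2 concepts are only named here, not specified, so the following is just one plausible reading of hierarchical accumulation, sketched in Python with simple sums: the monthly level is built from the daily level rather than from the raw usage documents. The function names and the sample data are assumptions for illustration.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, Tuple


def daily_totals(usage: List[Tuple[date, int]]) -> Dict[date, int]:
    """First level: accumulate raw usage per day."""
    totals: Dict[date, int] = defaultdict(int)
    for day, quantity in usage:
        totals[day] += quantity
    return dict(totals)


def monthly_totals(daily: Dict[date, int]) -> Dict[Tuple[int, int], int]:
    """Second level: build months from days, never touching raw usage."""
    totals: Dict[Tuple[int, int], int] = defaultdict(int)
    for day, quantity in daily.items():
        totals[(day.year, day.month)] += quantity
    return dict(totals)


usage = [
    (date(2019, 5, 1), 3),
    (date(2019, 5, 1), 4),
    (date(2019, 5, 2), 10),
    (date(2019, 6, 1), 1),
]
daily = daily_totals(usage)
print(monthly_totals(daily))  # {(2019, 5): 17, (2019, 6): 1}
```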

Momchil created 2 prototypes that validated these ideas. Using PostgreSQL he managed to get to ~800 docs/sec and using Cassandra he got up to 4000 docs/sec.

The project, however, was put on hold and replaced with internal SAP solutions in order to, among other things:

- reduce TCO

- reuse existing software that already does metering

- support SAP CP domain model

- meter CF, K8s and custom infrastructure
